How to Build AI Apps That Scale: 3 Expert Principles for Success

Scaling AI Apps: Key Findings

Key takeaways:

- 42% of companies abandoned most of their AI initiatives in 2025, often because their systems failed to scale beyond prototypes.

- Scalable success depends more on architecture than on model complexity.

- Modular, hybrid, and adaptive designs help teams avoid failure and build AI that lasts.


Across industries, AI apps are pitched as game-changers, promising to transform customer experiences, streamline operations, and unlock new revenue.

That’s true, but only sometimes.

In 2025, AI failures are on the rise. According to S&P Global Market Intelligence data reported by CIO Dive, 42% of companies have abandoned most of their AI initiatives, up sharply from 17% in 2024.

The reasons go beyond technical glitches.

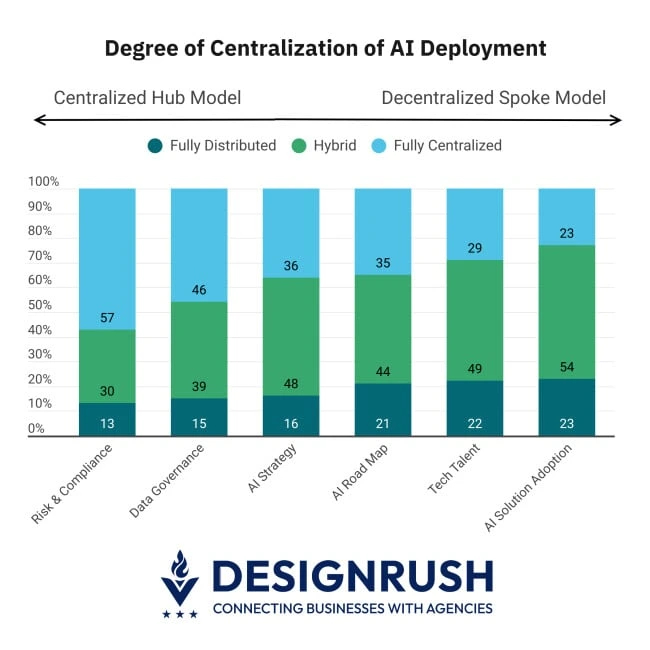

McKinsey’s 2025 State of AI report shows that organizational structure plays a major role in whether AI scales or stalls.

And the companies that succeed are the ones that stay organized around data and risk.

Even with so many abandoning AI initiatives, the remaining 58% of companies are sticking to their AI plans, according to the same CIO Dive reporting.

So what separates those who succeed from those who stall? Smart planning and architecture.

Flashy prototypes can win headlines, but sustainable systems require careful design choices that anticipate real-world constraints.

In particular, three architecture principles have emerged as critical for AI longevity.

These are what separate the AI apps that scale successfully from those that quietly collapse:

- Modular system design: Enables flexibility and faster upgrades without full rebuilds

- Hybrid compute strategies: Balances performance and cost by optimizing where models run

- Continuous learning workflows: Keeps models relevant through real-time adaptation and monitoring

Firms like Suffescom Solutions, a leading AI and Web3 consulting company, see this pattern across the companies they advise.

They know that success hinges less on model novelty and more on infrastructure that can bend without breaking.

“The biggest ROI from AI doesn’t come from the model itself,” said Mr. Gurpreet Singh Walia, CEO at Suffescom Solutions.

“Rather, it comes from how well the AI development process scales, adapts, and stays reliable under pressure. Without that foundation, even the most advanced algorithms will collapse under real-world demands.”

So what exactly is causing these failures, and how can teams prevent them before it’s too late?

Let’s look at where AI systems typically break down at scale, and the architecture principles that prevent it.

The True Cost of AI at Scale

Big ideas aren’t the culprit behind these failures. Typically, it’s that the systems can’t handle the pressure.

And when they crack, the weak points tend to show up in three places:

1. Inference Costs

Large language models are computationally hungry. A prototype running a few queries may look smooth. But when millions of users interact daily, the bill can rival payroll. Organizations discover too late that the cost per request makes the business model untenable.
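To make the scaling effect concrete, here is a minimal back-of-the-envelope sketch. The token prices, token counts, and traffic figures are hypothetical placeholders, not quotes from any provider; the point is only how the same per-request cost behaves at prototype and production volumes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceCostModel:
    """Hypothetical per-request cost model; every price here is a placeholder."""
    price_per_1k_input_tokens: float   # USD, assumed
    price_per_1k_output_tokens: float  # USD, assumed

    def cost_per_request(self, input_tokens: int, output_tokens: int) -> float:
        # Cost scales linearly with the tokens sent and generated.
        return (
            input_tokens / 1000 * self.price_per_1k_input_tokens
            + output_tokens / 1000 * self.price_per_1k_output_tokens
        )

    def monthly_cost(self, requests_per_day: int, input_tokens: int,
                     output_tokens: int, days: int = 30) -> float:
        per_request = self.cost_per_request(input_tokens, output_tokens)
        return requests_per_day * days * per_request


# Assumed prices and traffic, for illustration only.
model = InferenceCostModel(price_per_1k_input_tokens=0.001,
                           price_per_1k_output_tokens=0.002)

prototype = model.monthly_cost(requests_per_day=500,
                               input_tokens=800, output_tokens=300)
production = model.monthly_cost(requests_per_day=2_000_000,
                                input_tokens=800, output_tokens=300)

print(f"prototype:  ${prototype:,.2f} per month")
print(f"production: ${production:,.2f} per month")
```

Under these assumed numbers, the prototype costs roughly $21 a month while production traffic costs roughly $84,000 a month for the identical request. Running this kind of estimate before launch shows whether the cost per request fits the business model.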

2. Model Brittleness

Models shine when tested on data that looks like what they were trained on. Real life is messier. Customer behavior shifts. Market trends evolve. Even small changes in input data can throw predictions off.

3. Fragile Pipelines

Data comes from sensors, transactions, and human behavior; all of it is noisy and inconsistent. If monitoring is weak, problems go unnoticed until they reach the customer.
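One way to keep bad data from reaching the customer unnoticed is to validate each batch and alert when too many records are rejected. The sketch below is illustrative: the field names, the temperature range, and the 25% alert threshold are all assumptions, and a production pipeline would likely use a dedicated validation library.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    total: int = 0
    rejected: int = 0
    reasons: dict = field(default_factory=dict)

    @property
    def reject_rate(self) -> float:
        return self.rejected / self.total if self.total else 0.0


def _first_problem(rec, required, ranges):
    """Return a short reason string for the first problem found, else None."""
    for name in required:
        value = rec.get(name)
        if value is None or (isinstance(value, float) and math.isnan(value)):
            return f"missing:{name}"
    for name, (lo, hi) in ranges.items():
        value = rec.get(name)
        if value is not None and not (lo <= value <= hi):
            return f"out_of_range:{name}"
    return None


def validate_batch(records, required, ranges):
    """Split records into clean rows plus a report of why others were rejected."""
    report = ValidationReport()
    clean = []
    for rec in records:
        report.total += 1
        reason = _first_problem(rec, required, ranges)
        if reason is None:
            clean.append(rec)
        else:
            report.rejected += 1
            report.reasons[reason] = report.reasons.get(reason, 0) + 1
    return clean, report


# Hypothetical sensor batch with the kinds of noise described above.
batch = [
    {"sensor_id": "a1", "temp_c": 21.5},
    {"sensor_id": "a2", "temp_c": float("nan")},
    {"sensor_id": "a3", "temp_c": 480.0},
    {"sensor_id": None, "temp_c": 19.0},
]
clean, report = validate_batch(
    batch,
    required=["sensor_id", "temp_c"],
    ranges={"temp_c": (-40.0, 85.0)},  # assumed plausible range
)

MAX_REJECT_RATE = 0.25  # assumed alert threshold
should_alert = report.reject_rate > MAX_REJECT_RATE
print(report.reasons, should_alert)
```

The report counts rejections by reason, so a sudden spike in one category points at a specific upstream source instead of surfacing later as a bad prediction.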

These failures can easily cut into profit margins and erode trust, both inside organizations and among end users.

In other words, they turn promising apps into team headaches and business liabilities.

Designing for Long-Term Scalability

Avoiding these pitfalls requires architecture built for growth.

Not every organization needs the same blueprint, but three important principles stand out:

1. Build with Modularity and Flexibility

AI systems should be assembled like Lego sets, not poured like concrete. Each component, from data processing to monitoring, should be replaceable.

That way, teams can upgrade or swap parts without tearing down the whole system.

Consider feature engineering. If pipelines are separated from model-serving layers, engineers can refine inputs without causing downtime in the app.

This adaptability reduces the risk of costly rebuilds, and research backs it up.

According to a 2024 study in the Journal of Manufacturing Systems, a company used a modular AI system to test for leaks. Because parts of the system could be swapped out quickly to handle different products, the work became faster, easier, and cheaper.

If your AI app needs to grow, change, or get better over time (and most do), choosing a modular design is a smart way to keep everything running smoothly without breaking.
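The feature-engineering example above can be sketched with two small interfaces. This is a minimal illustration, not a reference architecture: the class names, the `visits` and `purchases` fields, and the stand-in model are all hypothetical.

```python
from typing import Protocol, Sequence


class FeaturePipeline(Protocol):
    def transform(self, raw: dict) -> Sequence[float]: ...


class Model(Protocol):
    def predict(self, features: Sequence[float]) -> float: ...


class BasicFeatures:
    def transform(self, raw: dict) -> Sequence[float]:
        return [float(raw["visits"]), float(raw["purchases"])]


class RatioFeatures:
    """A refined pipeline that adds a conversion-rate feature."""
    def transform(self, raw: dict) -> Sequence[float]:
        visits = float(raw["visits"])
        purchases = float(raw["purchases"])
        rate = purchases / visits if visits else 0.0
        return [visits, purchases, rate]


class MeanModel:
    """Stand-in model: returns the mean of its inputs."""
    def predict(self, features: Sequence[float]) -> float:
        return sum(features) / len(features)


class PredictionService:
    """Serving layer that depends only on the two interfaces above."""
    def __init__(self, pipeline: FeaturePipeline, model: Model) -> None:
        self._pipeline = pipeline
        self._model = model

    def swap_pipeline(self, pipeline: FeaturePipeline) -> None:
        # Replace the feature step without touching the serving code.
        self._pipeline = pipeline

    def predict(self, raw: dict) -> float:
        return self._model.predict(self._pipeline.transform(raw))


service = PredictionService(BasicFeatures(), MeanModel())
before = service.predict({"visits": 10, "purchases": 2})

service.swap_pipeline(RatioFeatures())  # upgrade inputs, service keeps running
after = service.predict({"visits": 10, "purchases": 2})
print(before, after)
```

The stand-in model accepts any number of features, which keeps the sketch short. A real model and its feature pipeline would need to agree on a schema, so a pipeline swap usually ships together with a compatible model version.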

2. Adopt Hybrid Compute Strategies

Running everything in the cloud is simple. It’s also expensive. A hybrid compute strategy helps balance cost, speed, and system resilience.

- Lightweight models can live at the edge, reducing latency and cutting cloud calls.

- Heavy models can remain in the cloud, where they have access to more compute power.

- Model distillation or mixture-of-experts can trim resource use while maintaining accuracy.

Together, these choices act like financial tuning knobs, helping teams deploy AI efficiently without burning through budget as user traffic grows.
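One possible shape for such a tuning knob is a router that tries a lightweight edge model first and calls the cloud only when the edge model is unsure. The sketch below assumes both models return a label with a confidence score; the toy models, labels, and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


@dataclass
class HybridRouter:
    edge_model: Callable[[str], Prediction]
    cloud_model: Callable[[str], Prediction]
    min_edge_confidence: float = 0.8  # assumed threshold; tune per workload
    edge_hits: int = 0
    cloud_calls: int = 0

    def predict(self, text: str) -> Prediction:
        label, confidence = self.edge_model(text)
        if confidence >= self.min_edge_confidence:
            self.edge_hits += 1
            return label, confidence
        # Edge model is unsure: pay for the larger cloud model.
        self.cloud_calls += 1
        return self.cloud_model(text)


def tiny_edge_model(text: str) -> Prediction:
    # Toy heuristic standing in for a small on-device model.
    if "refund" in text.lower():
        return "billing", 0.95
    return "unknown", 0.30


def big_cloud_model(text: str) -> Prediction:
    # Toy stand-in for a larger hosted model.
    return "general_support", 0.90


router = HybridRouter(tiny_edge_model, big_cloud_model)
results = [router.predict(t) for t in ["I want a refund", "My app keeps crashing"]]
print(results, router.edge_hits, router.cloud_calls)
```

Raising `min_edge_confidence` sends more traffic to the cloud and lowering it keeps more at the edge, so the threshold trades cost against accuracy. The two counters give a direct read on how much cloud spend the edge model is avoiding.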

3. Enable Continuous Learning Workflows

Static models are like frozen food. They deteriorate the longer they sit. Real-world AI must be fed fresh data.

That’s why Suffescom Solutions conducts constant monitoring, retraining, and drift detection.

Best practices include:

- CI/CD for ML pipelines. Updates flow regularly rather than in disruptive batches.

- Automated retraining. New data fine-tunes models without waiting for quarterly overhauls.

- Human-in-the-loop validation. Algorithms improve while people retain oversight.

Think of it like building a habit: the more your AI learns from real-world use, the better it gets at doing its job reliably.
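Drift detection can start as simply as comparing the distribution of a live feature against its training-time distribution. The sketch below uses the population stability index (PSI), one common drift metric among several; the 0.2 threshold is a widely cited rule of thumb treated here as an assumption, and the sample data is synthetic.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


PSI_RETRAIN_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff


def needs_retraining(reference: Sequence[float], live: Sequence[float],
                     threshold: float = PSI_RETRAIN_THRESHOLD) -> bool:
    return psi(reference, live) > threshold


# Synthetic data: training-time values, an unchanged sample, and a drifted one.
reference = [i / 100 for i in range(100)]
same_shape = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(needs_retraining(reference, same_shape))
print(needs_retraining(reference, shifted))
```

In a workflow like the one described above, a positive result would queue a retraining job for human review instead of deploying a new model automatically, which keeps the human-in-the-loop step intact.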


Engineer AI That Thrives at Scale

Poor AI design undermines trust, slows innovation, and weakens adaptability.

But it doesn’t have to.

As we’ve seen, the systems that succeed are built to work well in the long run. This is because they:

- Roll out faster

- Require fewer rebuilds

- Handle change resiliently

When teams embrace modular design, hybrid compute, and continuous learning, they sidestep the scaling issues that have led 42% of companies to abandon most of their AI initiatives.

Follow these principles, and you won’t just build AI that works.

You’ll build AI that grows, adapts, and endures.